A modern guide to astrophotography on macOS: the best Mac-compatible apps for planetary and deep-sky capture, guiding, stacking, plate solving, and processing—plus practical workflows that actually work in 2025-2026.

Space Station Passes: A Free, Ad-Free Way to Track Satellites

Planet Stacker X: A macOS-Native Planetary Imaging Tool from Rain City Astro

LuckyStackWorker: The Best Free Mac Software for Sharpening Planetary, Lunar, and Solar Astrophotography Images

Looking for a powerful, free tool to enhance your planetary, lunar, and solar astrophotography? LuckyStackWorker offers automated batch processing, adaptive sharpening, and advanced noise reduction—perfect for refining stacked images on macOS, Windows, and Linux. Download it today and take your astrophotography to the next level!

Takahashi Epsilon 160D Review: A Modern Evolution in Wide-Field Astrographs

The Role of AI in Astrophotography Image Processing: Tools and Controversies

Harmonic Drive vs. Worm Gear Mounts: How Strain Wave Technology is Changing Astrophotography

Learn how Harmonic Drive mounts differ from traditional worm gear mounts, their impact on astrophotography performance, and an overview of popular models from ZWO, Sky-Watcher, Pegasus Astro, and more.

Harmonic Drive mounts, also known as Strain Wave Gear mounts, have been gaining traction in the astrophotography community due to their unique design and performance advantages over traditional worm gear mounts. In this guide, we'll explore the distinctions between these two types of mounts and highlight some notable Harmonic Drive mounts from manufacturers like ZWO, Sky-Watcher, Pegasus Astro, and others.

Understanding the Difference: Harmonic Drive vs. Worm Gear Mounts

Traditional worm gear mounts utilize a worm screw meshing with a worm wheel to achieve the necessary gear reduction for precise tracking. While effective, this design can suffer from backlash—a slight lag when reversing direction—due to the inherent gaps between the meshing gears. Backlash can affect tracking accuracy, especially during long-exposure astrophotography.

In contrast, Harmonic Drive mounts employ a strain wave gearing system comprising three main components: a wave generator, a flexspline, and a circular spline. The wave generator deforms the flexspline, causing it to engage with the circular spline in a manner that ensures a large number of teeth are in contact at any given time. This design virtually eliminates backlash, offering smoother and more precise tracking. Additionally, Harmonic Drive mounts are typically more compact and lightweight, often eliminating the need for counterweights, which enhances portability—a significant advantage for field astrophotographers.
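To get a feel for why such a small gear set can deliver a large reduction, here is a minimal sketch of the standard strain wave ratio, assuming the circular spline is held fixed and the flexspline is the output. The tooth counts are illustrative only and are not taken from any mount mentioned in this article.

```python
def strain_wave_reduction(flexspline_teeth: int, circular_spline_teeth: int) -> float:
    """Wave generator turns needed for one output turn of the flexspline.

    Assumes the circular spline is fixed. The flexspline has slightly fewer
    teeth, and that small difference is what produces the large reduction.
    """
    if circular_spline_teeth <= flexspline_teeth:
        raise ValueError("circular spline must have more teeth than the flexspline")
    return flexspline_teeth / (circular_spline_teeth - flexspline_teeth)


# Hypothetical tooth counts, chosen only to illustrate the arithmetic.
ratio = strain_wave_reduction(flexspline_teeth=200, circular_spline_teeth=202)
print(f"Reduction ratio: {ratio:.0f}:1")
```

A two-tooth difference on a 200-tooth flexspline already gives a 100:1 reduction in a single stage, which is part of why these mounts can be so compact.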

Impact on Performance

The zero-backlash characteristic of Harmonic Drive mounts leads to improved tracking accuracy, which is crucial for capturing sharp images during long exposures. The high torque capacity of strain wave gears allows these mounts to handle substantial payloads relative to their size, further contributing to their appeal among astrophotographers seeking a balance between portability and performance. However, it's worth noting that while Harmonic Drive mounts offer these advantages, they may exhibit higher periodic error compared to well-tuned worm gear mounts. This periodic error can be mitigated through autoguiding techniques, but it's an important consideration for users aiming for the utmost precision in their imaging.

Popular Harmonic Drive Mounts

Here are some notable Harmonic Drive mounts from various manufacturers:

ZWO AM5: The ZWO AM5 is a compact and lightweight mount that supports up to 13 kg without a counterweight and 20 kg with one. It operates in both equatorial and alt-azimuth modes, providing versatility for different observational needs. The mount integrates seamlessly with ZWO's ASIAIR system, allowing for wireless control and an intuitive user interface.

Sky-Watcher 100i: The Sky-Watcher 100i is an affordable Harmonic Drive mount priced at $1,695. It offers a payload capacity of 10 kg without a counterweight and 15 kg with one, making it a compelling option for those seeking a balance between cost and performance.

Pegasus Astro NYX-101: The NYX-101 is designed for astrophotographers requiring advanced tracking capabilities and remote operation features. It boasts a payload capacity of up to 20 kg without counterweights and 30 kg with them, positioning it as one of the more powerful Harmonic Drive mounts available.

Rainbow Astro RST-135: Weighing only 3.3 kg, the RST-135 is one of the lightest Harmonic Drive mounts on the market. Despite its lightweight design, it can support up to 13.5 kg without a counterweight and 18 kg with one. Its exceptional tracking accuracy and portability make it an excellent choice for astrophotographers on the move.

iOptron HEM27: The iOptron HEM27 is a budget-friendly hybrid Harmonic Drive mount that combines a strain-wave drive system with traditional worm gears for added stability. It supports a payload capacity of 13.5 kg without a counterweight and 20 kg with one, offering a good balance between performance and affordability.

Conclusion

Harmonic Drive mounts present a compelling alternative to traditional worm gear mounts, especially for astrophotographers prioritizing portability and reduced backlash. While they come at a higher price point and may require considerations for periodic error correction, their advantages make them worthy of consideration for both amateur and seasoned astrophotographers.

If you're interested in purchasing a Harmonic Drive mount, consider supporting Mac Observatory by visiting Agena Astro. By using our affiliate link, you help us to continue to produce astrophotography articles and reviews.

Celestron EdgeHD 11 Review: The Ultimate Telescope for Planetary and Deep-Sky Astrophotography

A review of the Celestron EdgeHD 11” SCT, covering its optical performance, imaging versatility at f/10, f/7, and f/1.9, and the best accessories to enhance its capabilities.

The Celestron EdgeHD 11” Schmidt-Cassegrain Telescope (SCT) is an ambitious optical system designed for serious astronomers and astrophotographers who demand high-quality, flat-field performance. This telescope represents a significant leap over traditional SCTs, addressing long-standing issues such as field curvature and off-axis coma—common problems that degrade image quality at the edges of the field. With an 11-inch (280mm) aperture, a native focal length of 2800mm (f/10), and the ability to operate at f/7 and even f/1.9 with accessories, the EdgeHD 11 is one of the most versatile optical systems available today.

But with great power comes great responsibility—specifically, the need for a robust mount, precise collimation, and careful thermal management. In this deep-dive review, we’ll explore the design, performance, challenges, and solutions to help you determine if this telescope is the right fit for your astrophotography ambitions.

My first heavy-duty mount, the Celestron CGX, holding firm with the EdgeHD 11.

Design and Optical Innovations: Why EdgeHD?

Celestron’s EdgeHD series builds upon their classic Schmidt-Cassegrain design by incorporating a corrective optical system inside the baffle tube. Traditional SCTs suffer from field curvature—a natural result of their optical design—which causes stars at the edges of an image to appear elongated or out of focus. This isn’t a major problem for visual astronomy, but for astrophotography, where large image sensors capture wide fields of view, the distortion becomes obvious.

The EdgeHD’s corrective optics flatten the field, ensuring sharp, round stars across even the largest imaging sensors. The result? A telescope that performs exceptionally well for deep-sky imaging without requiring additional field flatteners.

Key Features of the EdgeHD Design

Flat, coma-free field: Ideal for imaging with full-frame cameras

Internal mirror locks: Reduce mirror shift and improve focus stability

Cooling vents with integrated filters: Expedite temperature equalization

Fastar/HyperStar compatibility: Enables ultra-fast imaging at f/1.9

This system makes the EdgeHD 11 a top choice for astrophotography, but its design also benefits visual observers by providing exceptionally sharp views with pinpoint stars across the entire field.

Setup and Handling: A Heavyweight Contender

The EdgeHD 11” is a beast, and that’s something prospective buyers should take seriously. The optical tube assembly (OTA) alone weighs 28 lbs (12.7 kg), but once you add a diagonal, eyepieces, a camera, guiding equipment, and mounting hardware, the total payload can easily exceed 35-40 lbs (16-18 kg). This weight demands a sturdy, high-capacity mount to ensure stable tracking, especially at the native 2800mm focal length, where even tiny tracking errors become exaggerated.
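To put a number on how unforgiving the native focal length is, the standard pixel-scale formula can be sketched as follows. The 3.76 µm pixel size and the 1 arcsecond tracking error are assumptions for illustration, not values from this review.

```python
ARCSEC_PER_RADIAN = 206_264.806


def pixel_scale_arcsec(pixel_size_um: float, focal_length_mm: float) -> float:
    """Angle of sky covered by one pixel, in arcseconds."""
    pixel_size_mm = pixel_size_um / 1000.0
    return ARCSEC_PER_RADIAN * pixel_size_mm / focal_length_mm


def error_in_pixels(error_arcsec: float, pixel_size_um: float, focal_length_mm: float) -> float:
    """How many pixels a given tracking error smears a star across."""
    return error_arcsec / pixel_scale_arcsec(pixel_size_um, focal_length_mm)


PIXEL_UM = 3.76        # assumed camera pixel size
ERROR_ARCSEC = 1.0     # assumed tracking error

for focal_length_mm in (2800, 1960):
    scale = pixel_scale_arcsec(PIXEL_UM, focal_length_mm)
    smear = error_in_pixels(ERROR_ARCSEC, PIXEL_UM, focal_length_mm)
    print(f"{focal_length_mm}mm: {scale:.2f} arcsec/pixel, "
          f"{ERROR_ARCSEC} arcsec error spans {smear:.1f} pixels")
```

With these assumed values, a single arcsecond of drift covers several pixels at 2800mm, which is why the mount matters as much as the optics.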

Mount Recommendations

For visual use, an equatorial mount such as the Celestron CGEM II or Losmandy G11 can handle the load while keeping tracking stable.

For astrophotography, a mount with at least a 50 lb payload capacity is required to ensure accurate guiding at long focal lengths. Some of the best options include:

Celestron CGX / CGX-L – A solid choice for EdgeHD users looking for an integrated system

iOptron CEM70 / CEM120 – A lightweight yet powerful mount with excellent tracking accuracy for long-exposure imaging

10Micron GM1000 HPS – A premium mount with absolute encoders, allowing unguided imaging at long focal lengths

Astro-Physics Mach2 GTO – A top-tier mount with high precision and smooth tracking for serious astrophotographers

Software Bisque Paramount MYT – A high-end option designed for professional-level imaging

My EdgeHD 1100 on a 10Micron GM1000 mount.

Performance: What the EdgeHD 11 Excels At

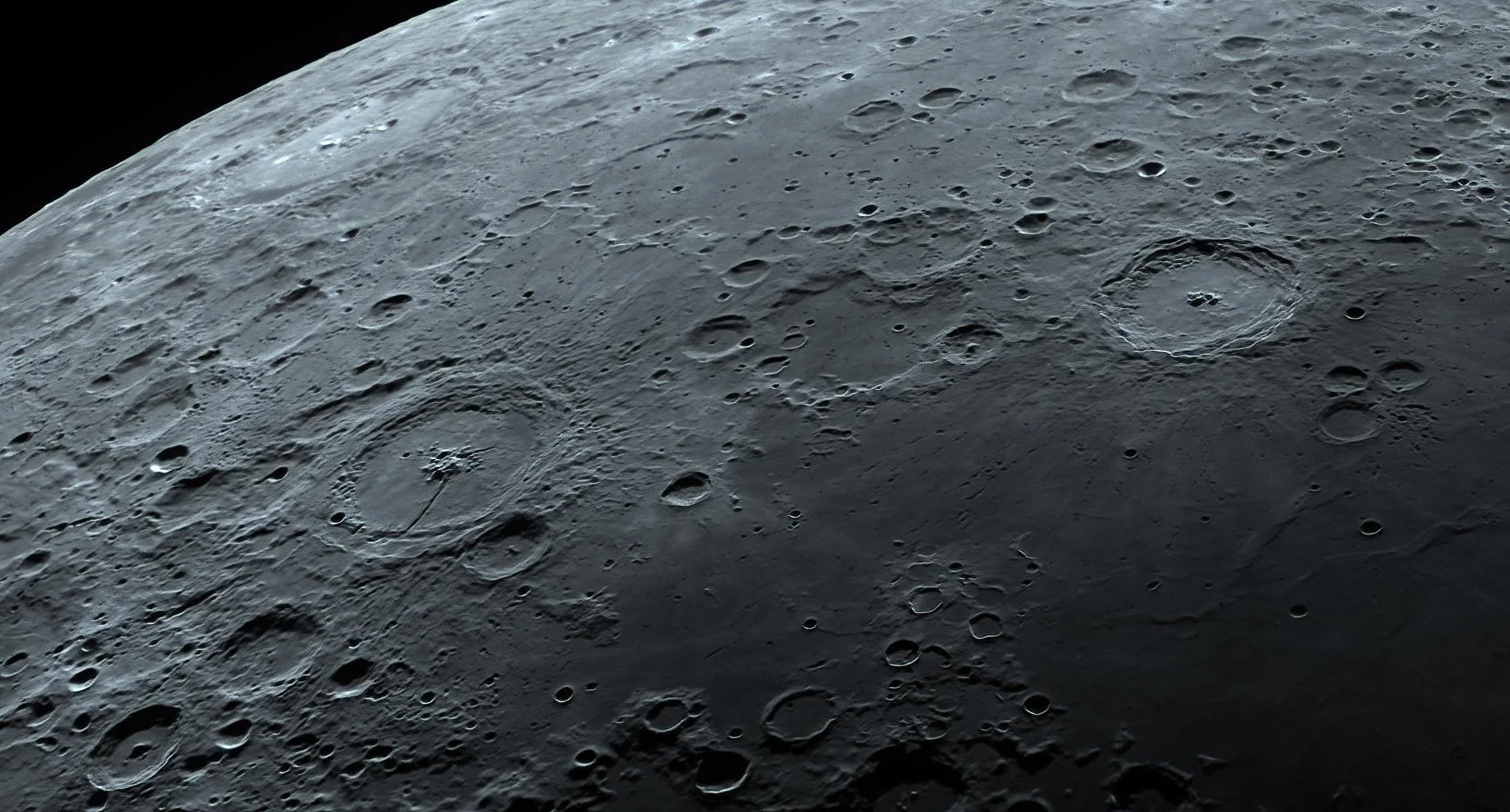

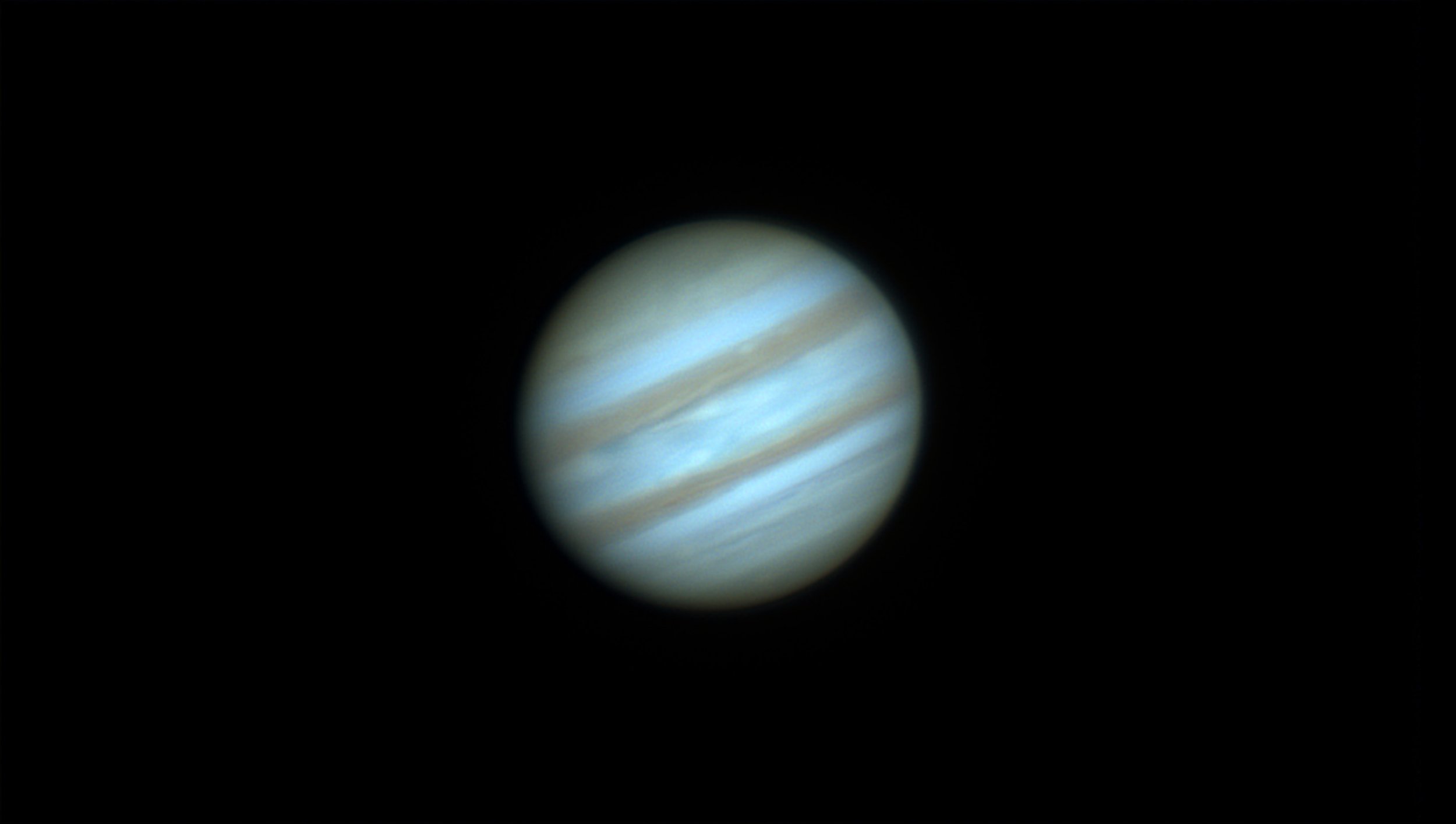

Sharp, High-Contrast Planetary and Lunar Observing

At f/10, the EdgeHD 11 shines as a planetary and lunar powerhouse. With 2800mm of focal length, it provides high-resolution views of the Moon’s craters, Jupiter’s cloud belts, and Saturn’s rings. The contrast is excellent, especially with high-quality eyepieces or planetary cameras like the ZWO ASI482MC.

However, achieving perfect focus can be tricky due to mirror shift, a common issue with SCTs. The built-in mirror locks help stabilize focus, but some users prefer adding a Crayford-style focuser to eliminate focus shift entirely.

Deep-Sky Imaging at f/10 and f/7

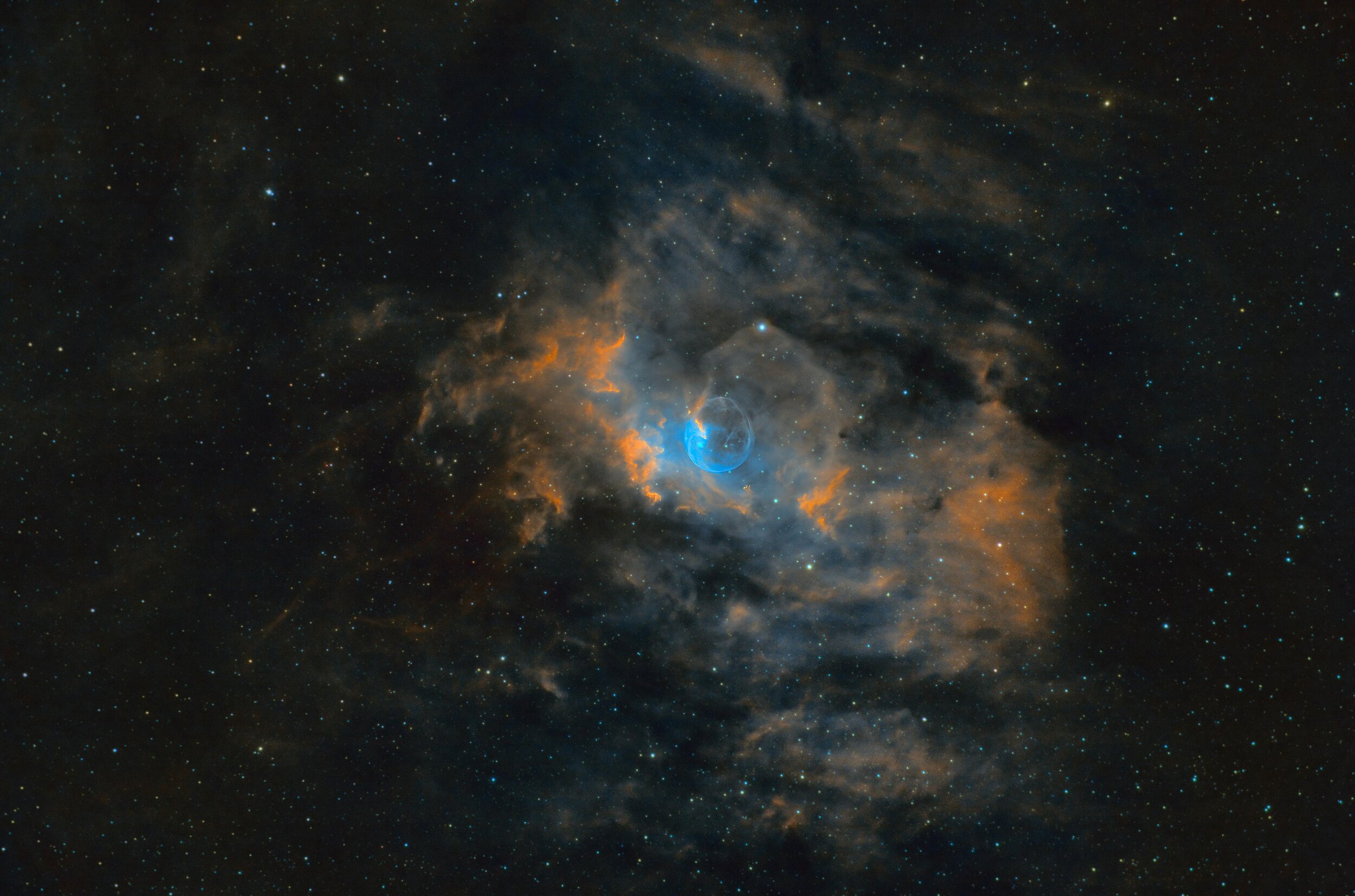

For deep-sky astrophotography, f/10 provides incredible detail on small, distant objects such as planetary nebulae and galaxies. However, at 2800mm focal length, guiding must be precise, and exposure times can be long.

To make deep-sky imaging more practical, Celestron offers a 0.7x focal reducer, which shortens the focal length to 1960mm (f/7). This:

Increases the field of view – Ideal for capturing larger nebulae

Reduces exposure times by 50% – Allowing for more efficient imaging sessions

Maintains the flat-field design – No additional field correctors needed
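The focal length and exposure figures in this list follow from simple ratios. Here is a minimal sketch, assuming the usual rule that exposure time for extended objects scales with the square of the focal ratio; the function names are my own and not from any astronomy library.

```python
def reduced_focal_length(native_focal_length_mm: float, reducer_factor: float) -> float:
    """Effective focal length once a focal reducer is in place."""
    return native_focal_length_mm * reducer_factor


def exposure_time_factor(old_f_ratio: float, new_f_ratio: float) -> float:
    """Fraction of the original exposure time needed at the new focal ratio.

    Assumes signal per pixel scales with 1 / f_ratio**2 (extended objects).
    """
    return (new_f_ratio / old_f_ratio) ** 2


focal_length = reduced_focal_length(2800, 0.7)
factor = exposure_time_factor(old_f_ratio=10, new_f_ratio=7)

print(f"Focal length with reducer: {focal_length:.0f}mm")
print(f"Exposure time needed: {factor:.0%} of the f/10 exposure")
```

A five-minute sub at f/10 would therefore need roughly two and a half minutes at f/7 under the same assumption.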

While f/7 is a great improvement, it still requires longer exposures and accurate guiding. That’s where HyperStar comes in.

“Half-pipe” within the Soul Nebula.

HyperStar and Ultra-Fast Imaging at f/1.9

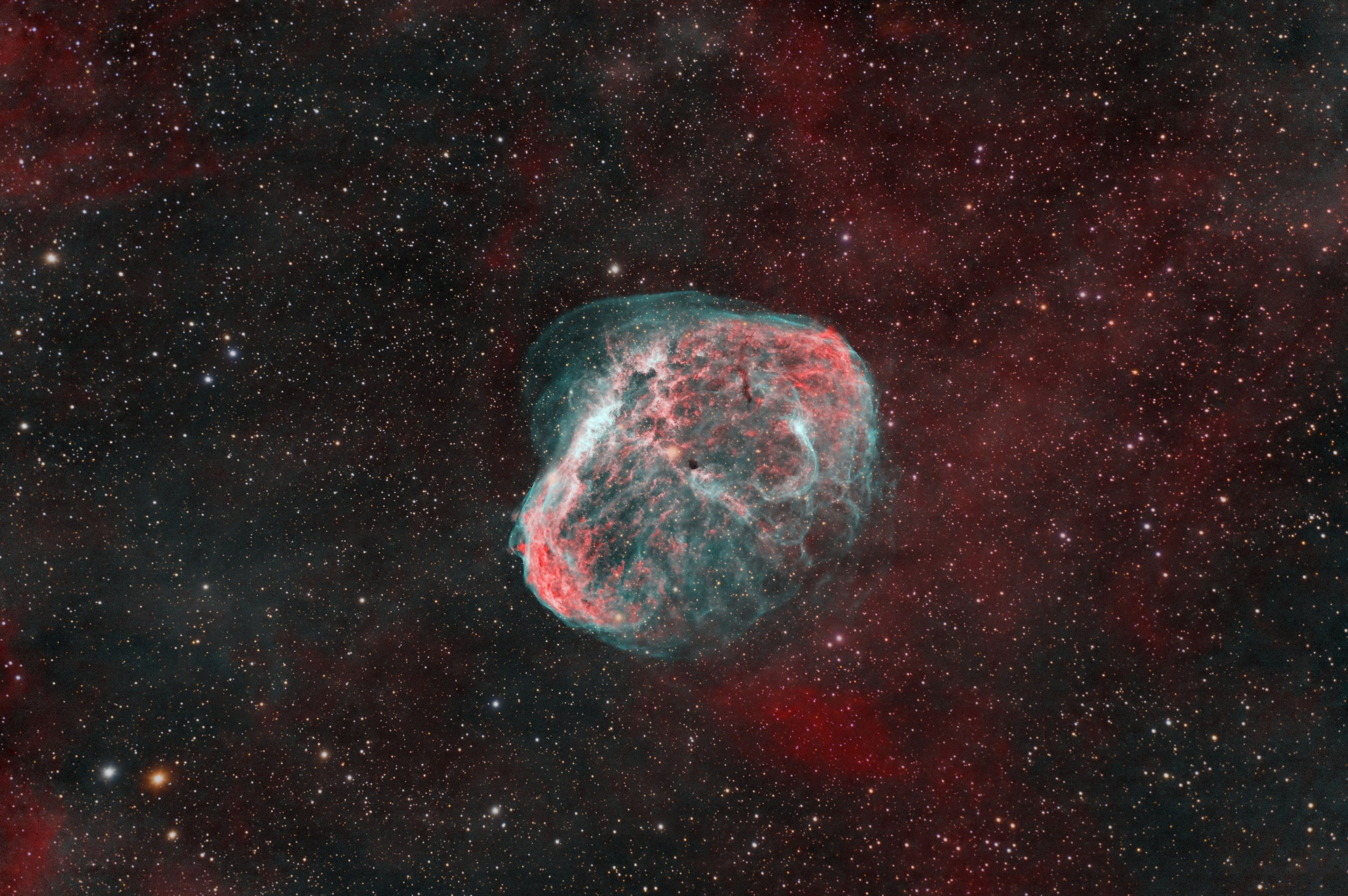

One of the most exciting features of the EdgeHD 11” is its compatibility with Starizona’s HyperStar V4, which converts the telescope into an f/1.9 astrophotography system. This transforms the focal length from 2800mm to roughly 540mm, dramatically changing how the telescope is used.

Why HyperStar is a Game-Changer

Extremely short exposure times – Capture deep-sky objects in seconds instead of minutes

Ultra-wide field of view – Perfect for nebulae and galaxies

Minimized tracking errors – Short exposures reduce the need for precise guiding

However, there are trade-offs:

Camera selection is critical – The camera mounts in front of the corrector plate, so a large camera body blocks incoming light, and its cables can create diffraction spikes

Collimation becomes more sensitive – Requires careful adjustment to maintain sharp stars

Removes the secondary mirror – Limits ability to switch quickly between visual and HyperStar imaging

Despite these challenges, HyperStar transforms the EdgeHD 11 into a powerful wide-field imaging system, allowing it to rival refractors for nebulae and large galaxies.
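To see how much the field of view changes across the three configurations, here is a rough sketch. It derives each focal length from the 280mm aperture times the focal ratio, which is a nominal figure and can differ slightly from published specifications. The APS-C sized sensor dimensions are an assumption for illustration.

```python
import math

APERTURE_MM = 280  # EdgeHD 11 aperture

# Assumed sensor dimensions (APS-C sized), for illustration only.
SENSOR_WIDTH_MM = 23.5
SENSOR_HEIGHT_MM = 15.7


def field_of_view_deg(sensor_side_mm: float, focal_length_mm: float) -> float:
    """Angular field of view along one sensor side, in degrees."""
    return math.degrees(2 * math.atan(sensor_side_mm / (2 * focal_length_mm)))


configurations = {"f/10 native": 10.0, "f/7 reducer": 7.0, "f/1.9 HyperStar": 1.9}

for name, f_ratio in configurations.items():
    focal_length_mm = APERTURE_MM * f_ratio  # nominal focal length
    width = field_of_view_deg(SENSOR_WIDTH_MM, focal_length_mm)
    height = field_of_view_deg(SENSOR_HEIGHT_MM, focal_length_mm)
    print(f"{name}: {focal_length_mm:.0f}mm, {width:.2f} x {height:.2f} degrees")
```

With this assumed sensor, the frame grows from under half a degree wide at f/10 to about two and a half degrees at f/1.9, which is the range where large nebulae start to fit in a single frame.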

Addressing Common Issues with the EdgeHD 11

While the EdgeHD 11 delivers exceptional optical quality, there are a few inherent issues to consider:

1. Mirror Flop and Focus Shift

Issue: The primary mirror moves when focusing, causing the image to shift or drift.

Solution: Use the built-in mirror locks during imaging and consider adding a Crayford-style external focuser.

2. Long Focal Length and Mount Stability

Issue: At 2800mm, even small tracking errors can ruin long exposures.

Solution: Use a high-quality equatorial mount with auto-guiding. An off-axis guider (OAG) is recommended over a guide scope.

3. Cooling and Dew Control

Issue: SCTs take time to reach ambient temperature, and dew buildup is common.

Solution: Use active cooling fans and a dew heater to prevent moisture accumulation on the corrector plate.

Final Thoughts: Who Is the EdgeHD 11 For?

The Celestron EdgeHD 11” is a telescope for serious astronomers—whether you’re into high-resolution planetary imaging, deep-sky astrophotography, or ultra-fast HyperStar imaging. Its versatility is unmatched, but it requires a solid mount, careful collimation, and proper accessories to perform at its best.

For those willing to invest in a capable mount and guiding system, the EdgeHD 11 provides stunning astrophotography potential and incredible visual views. Whether capturing the intricate details of Jupiter’s storms or the delicate structures of distant nebulae, this telescope delivers.

Support MacObservatory by visiting Agena Astro to find accessories and equipment for your EdgeHD 11!

A few of the images I’ve shot with this telescope

Polar Alignment Guide for Motorized Telescope Mounts

Planetary System Stacker now has a launcher installer

As some of you know, I’ve recently put a second telescope out at a remote site, this time at StarFront Observatories. In the Discord one day, I noticed a fellow imager, Nick Kohrn, mention that he develops Mac apps. On a whim, I asked him if he wouldn’t mind taking a look at Planetary System Stacker, an open source planetary stacking application that gives results on par with AutoStakkert on the PC. PSS has been around for a number of years, but it is a Python-based application, which can run on the Mac only if you’re familiar enough with the command line to install it. So for a lot of people it’s been out of reach for everyday use.

Nick graciously took a look and found he could make an installer/launcher application for PSS, and it took him only about a day to put it together.

I’m happy to report that it installs and runs smoothly with the click of a button. Instead of downloading the original PSS files and installing all the dependencies through the terminal, you only need to download the ZIP of Nick’s launcher and place it in your Applications folder.

Follow Nick’s guide to approve the Mac app in Apple’s security settings, and then the installer takes over. It does take about 5-10 minutes for the initial install, but launches after installation are immediate.

I also HIGHLY recommend you follow Rolf Hempel’s PDF documentation for how to use the application. There’s a slight learning curve to achieving the best results.

Selecting % of images to stack.

Place your alignment grid.

Results of alignment.

Wavelets processing, so this application also replaces Registax from the PC world.
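For readers curious what "selecting a percentage of images to stack" means in practice, here is a toy sketch of the general lucky-imaging idea: score every frame for sharpness and keep only the best fraction. This is not PSS's actual algorithm. The gradient-based score and both function names are my own simplifications, and frames are plain nested lists of brightness values.

```python
def sharpness(frame):
    """Sum of squared differences between neighbouring pixels.

    Blurry frames have smoother gradients and therefore score lower.
    """
    rows, cols = len(frame), len(frame[0])
    total = 0.0
    for y in range(rows):
        for x in range(cols):
            if x + 1 < cols:
                total += (frame[y][x + 1] - frame[y][x]) ** 2
            if y + 1 < rows:
                total += (frame[y + 1][x] - frame[y][x]) ** 2
    return total


def select_best_frames(frames, keep_percent):
    """Return the indices of the sharpest frames, in original order."""
    keep = max(1, round(len(frames) * keep_percent / 100))
    ranked = sorted(range(len(frames)), key=lambda i: sharpness(frames[i]), reverse=True)
    return sorted(ranked[:keep])


# Three tiny made-up frames: soft, sharp, and completely flat.
soft = [[4, 6], [6, 4]]
sharp = [[0, 10], [10, 0]]
flat = [[5, 5], [5, 5]]

print(select_best_frames([soft, sharp, flat], keep_percent=34))
```

Real stackers work on thousands of video frames and score local patches rather than whole frames, but the trade-off is the same: a lower percentage keeps only the steadiest moments at the cost of more noise in the final stack.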

Image details